Hot on the heels of Yahoo’s Yslow, Google have published Page Speed, a tool they have been using to optimize their own web pages. Now you can use it too.

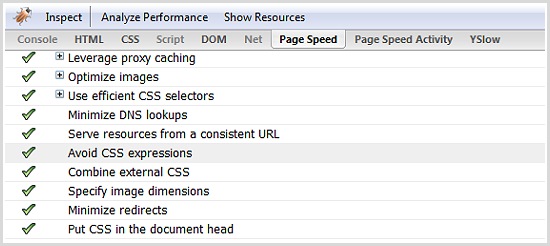

Page Speed is similar to Yslow in several respects: it’s an add-on to Firebug; it analyses your pages according to a set of performance rules; it draws attention to rules that you score badly on; and it also provides a page activity monitor.

For each rule, Page Speed gives you a general indication of how well you’re doing, in the form of a green tick (good), red circle (bad), or amber triangle (indifferent). You can also hover over a rule to see your percentage score. Page Speed does not provide an overall percentage score, but it does arrange the results in order of importance.

Since my previous article about Yslow 1.0, Yslow 2.0 has been released; this includes a further 9 rules. Let’s now take a look at all these rules—both Page Speed and Yslow.

Page Speed rules shared with Yslow 1.0

These rules are the same as those I previously discussed, although their organisation is different. I’m not going to cover these rules again, but you may still find some of Page Speed’s documentation details interesting.

- Avoid CSS expressions

- Combine external CSS

- Combine external javascript

- Enable gzip compression

- Leverage browser caching

- Minify javascript

- Minimize DNS lookups

- Minimize redirects

- Parallelize downloads across multiple hostnames

- Put CSS in the document head

Page Speed may sometimes advise you to do stupid things. For example, under the “Leverage browser caching” rule, Page Speed suggested that I make __utm.gif cacheable. This tiny gif is used by Google Analytics to help compile your statistics; if you make it cacheable, Analytics will fail to track visitors who retrieved it from a cache. Leave it alone!

Page Speed rules shared with Yslow 2.0

I haven’t yet discussed these rules, so let’s look at them now:

- Minimize cookie size

- Serve static content from a cookie-less domain

- Specify image dimensions

Minimize cookie size

Google’s advice here is more specific than Yslow’s: keep the average cookie size below 400 bytes. I get perfect marks on this from both tools, so I haven’t investigated further.
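If you want to check this yourself, here’s a rough sketch that measures the cookies visible to javascript. It’s my own illustration, not Page Speed’s method, and the function names are placeholders. Note that document.cookie doesn’t show HttpOnly cookies, so the real Cookie header your browser sends may be larger.

```javascript
// Rough sketch, not Page Speed's algorithm: measure the cookies in a
// document.cookie-style string ("name1=value1; name2=value2").
const PAGE_SPEED_COOKIE_LIMIT = 400; // bytes: the average Google recommends

function cookieStats(cookieString) {
  const encoder = new TextEncoder(); // count UTF-8 bytes, not characters
  const sizes = cookieString
    .split(";")
    .map((c) => c.trim())
    .filter((c) => c.length > 0)
    .map((c) => encoder.encode(c).length);
  const totalBytes = sizes.reduce((sum, n) => sum + n, 0);
  return {
    count: sizes.length,
    totalBytes,
    averageBytes: sizes.length > 0 ? totalBytes / sizes.length : 0,
  };
}

function cookiesLookSmall(cookieString) {
  return cookieStats(cookieString).averageBytes < PAGE_SPEED_COOKIE_LIMIT;
}
```

In Firebug’s console you could paste both functions and then run cookieStats(document.cookie).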

Serve static content from a cookie-less domain

I score badly on this one, and I expect many other sites will too. Surprisingly, requests for components such as images also include cookies, and these are generally just useless network traffic. The best way to fix this is to use a CDN; obviously, this will also cause you to score well on Yslow’s “Use a CDN” rule.

And yes, this means I’ve changed my mind about CDNs. Some good, cheap CDNs are now available, such as SimpleCDN or Amazon CloudFront. I use SimpleCDN, and have found them to be okay; but I’m not happy that they changed their service offering at short notice, and their Lightning service is currently not working for me, hence my poor score on this rule!
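If you don’t use a CDN, the cookie-less part can be handled when you generate your pages. The sketch below rewrites URLs for static files so that they point at a separate hostname; static.example.com and the list of extensions are placeholders of my own. One caveat: if your cookies are set for the whole domain (.example.com), they will still be sent to a subdomain such as static.example.com, which is why some large sites serve static files from an entirely separate domain.

```javascript
// Sketch: point static assets at a cookie-less host. STATIC_HOST and
// STATIC_EXTENSIONS are placeholders, not settings from any real tool.
const STATIC_HOST = "static.example.com";
const STATIC_EXTENSIONS = [".png", ".gif", ".jpg", ".css", ".js"];

function toCookielessUrl(href) {
  const url = new URL(href);
  const path = url.pathname.toLowerCase();
  const isStatic = STATIC_EXTENSIONS.some((ext) => path.endsWith(ext));
  if (!isStatic) {
    return href; // dynamic pages stay on the main (cookie-bearing) host
  }
  url.host = STATIC_HOST; // keeps the protocol, path and query string
  return url.href;
}
```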

Specify image dimensions

Lazy designers often take a large image and use HTML to squash it down; consequently, the file size can be much larger than is necessary.

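Don’t use HTML to resize images; use a graphics program. The width and height attributes in your <img> tag should exactly match the actual size of the image. Doing so will also give the best appearance, as the browser does not need to scale the image. Specifying the dimensions at all matters too: it lets the browser lay out the page before the image has downloaded, instead of reflowing the page when it arrives.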

I get a perfect score on this, and there’s no excuse for anything less.
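If you’d like to hunt for offenders on an existing page, the sketch below is one way to do it (my own illustration, not how either tool performs the check). It compares each image’s real size (naturalWidth and naturalHeight) with the size it’s displayed at; in Firebug’s console you could run findScaledImages(document.images) once the page has finished loading.

```javascript
// Sketch: list images displayed at a size different from their real size.
// Accepts any iterable of objects with src, naturalWidth, naturalHeight,
// width and height, e.g. document.images once the images have loaded.
function findScaledImages(images) {
  const scaled = [];
  for (const img of images) {
    if (img.naturalWidth === img.width && img.naturalHeight === img.height) {
      continue; // displayed at its real size: nothing to fix
    }
    const naturalPixels = img.naturalWidth * img.naturalHeight;
    const shownPixels = img.width * img.height;
    scaled.push({
      src: img.src,
      natural: `${img.naturalWidth}x${img.naturalHeight}`,
      shown: `${img.width}x${img.height}`,
      // Share of downloaded pixels thrown away by shrinking (0 when enlarged).
      wastedFraction: naturalPixels > 0 ? Math.max(0, 1 - shownPixels / naturalPixels) : 0,
    });
  }
  return scaled;
}
```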

Rules unique to Page Speed

In some cases, Yslow’s guidelines may include these topics, but not in the form of an automated check on your web page.

- Defer loading of javascript

- Optimize images

- Optimize the order of styles and scripts

- Remove unused CSS

- Serve resources from a consistent URL

- Leverage proxy caching

- Use efficient CSS selectors

Defer loading of javascript

Before you can test your website against this rule, you must enable “Profile Deferrable Javascript” in the options. You may want to disable it again when you’re done, as it can slow down Firefox. Moreover, this profiling is only accurate on your first visit: to get an accurate result, you must start a new browser session and run Page Speed as soon as you load your website (before loading a second page).

The idea behind this rule is that javascript slows down your pages even when it’s not actually being used. Even if the script is cached, the browser must load it from disk and execute it. Some javascript functions need to be available before the onLoad event; others don’t. This rule proposes that you split off these latter functions into a separate file, and then “lazy-load” that file after the document has finished loading, typically by having a small script add a new <script> element once the onLoad event has fired.
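Here’s a minimal sketch of that lazy-loading trick, assuming the deferrable functions have already been moved into their own file. The function name and /js/deferred.js are my own placeholders, and this is not code taken from Page Speed. The window and document are passed in as arguments, which also makes the function easy to test outside a browser.

```javascript
// Sketch: load a script only after the page's load event has fired.
function loadScriptAfterOnload(win, doc, src) {
  function inject() {
    const script = doc.createElement("script");
    script.src = src;
    doc.body.appendChild(script); // added after load, so it can't delay onLoad
  }
  if (doc.readyState === "complete") {
    inject(); // the load event has already fired
  } else {
    win.addEventListener("load", inject);
  }
}

// In a page (placeholder file name):
// loadScriptAfterOnload(window, document, "/js/deferred.js");
```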

It’s unclear to me whether this lazy-loading is better than simply putting your script at the bottom of the page. If your script is at the bottom, then it will still need to be downloaded and evaluated before the onLoad event is fired (I think), and lazy-loading will bypass this limitation; but what if you’re using a framework such as jQuery, which offers a more sophisticated “DOM ready” event ($(document).ready()) that fires as soon as the document has been parsed, without waiting for images? To be honest, I don’t yet know enough about this issue to make simple recommendations. I suspect, however, that lazy-loading is even faster than simply putting javascript at the bottom of the page.

The good news is that Google Page Speed will identify these uncalled functions for you. Ironically, although I had plenty of uncalled functions, they all came from Google’s own ga.js; I’m not sure I want to mess with that, as it may screw up my Google Analytics stats.

Optimise images

Page Speed automatically creates optimised versions of your images, and offers a link to them. This is similar to running your images through Smush.it.

Optimise the order of styles and scripts

Ideally, you wouldn’t include any javascript in the <head>, as this violates Yslow’s rule, “Put javascript at the bottom.” If you need to include scripts in the <head>, however, try to get the order right. External scripts should come after all the external stylesheets, and inline scripts should come last of all.

Why does the order matter? Because javascript blocks subsequent downloads. While your javascript is downloading and being evaluated, the stylesheet that comes after it can’t be downloaded. Check out the documentation for more details.
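To make the ordering concrete, this sketch (my own; the kind labels are invented for the example) sorts a list of <head> resources into the recommended order. Because Array.prototype.sort is stable in current javascript engines, stylesheets keep their relative order, which matters for the cascade, and so do scripts, which may depend on each other. Moving an inline script below the external ones is only safe if nothing needs it to run first.

```javascript
// Sketch: order <head> resources as the rule recommends.
// The "kind" labels are invented for this example.
const HEAD_ORDER = { "external-css": 0, "external-js": 1, "inline-js": 2 };

function rank(item) {
  const r = HEAD_ORDER[item.kind];
  if (r === undefined) {
    throw new Error(`Unknown resource kind: ${item.kind}`);
  }
  return r;
}

function orderHeadResources(items) {
  // Copy first so the caller's array is untouched; sort is stable (ES2019+),
  // so items of the same kind keep their original relative order.
  return [...items].sort((a, b) => rank(a) - rank(b));
}
```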

Remove unused CSS

I score 100% on this one, which is surprising given that my CSS is an overgrown, tangled thicket of complexity that desperately needs pruning.

Every CSS rule adds bytes to be downloaded, and also requires parsing by the browser. Obviously it’s good to remove dusty old rules that you never use, but even after doing so you may still have a single monolithic CSS file that styles a diverse range of pages. As a result, every page gets a large amount of CSS that’s not needed; if your site is like this, it may be more efficient to split your CSS across multiple modules (although this increases HTTP requests).

Clearly a trade-off is necessary here. I recommend keeping a consistent style as much as possible; apart from the speed benefits, consistency helps visitors and generally looks more professional than constantly changing styles. For large sites with many different types of pages (such as Yahoo), however, it’s often better to split CSS into modules.

Even if your site only needs one stylesheet, it’s a good idea to start thinking in terms of object-oriented CSS, because this makes your CSS simpler, shorter, and more flexible; for an expert explanation of the topic, see Nicole Sullivan’s presentation.
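Page Speed does the unused-CSS check for you, but if you’re curious how such a check might work, here’s a rough approximation of my own (not Page Speed’s code) that runs in the browser as findUnusedSelectors(document). It only looks at the current page, so a rule it reports may still be needed elsewhere on your site, by content that javascript adds later, or by states such as :hover. It also skips rules nested inside @media blocks, and stylesheets from other domains, which the browser won’t let scripts read.

```javascript
// Sketch: list selectors that match no element on the current page.
// Pass `document` in a browser.
function findUnusedSelectors(doc) {
  const unused = [];
  for (const sheet of Array.from(doc.styleSheets)) {
    let rules;
    try {
      rules = sheet.cssRules; // throws for cross-origin stylesheets
    } catch (e) {
      continue;
    }
    for (const rule of Array.from(rules)) {
      // Only plain style rules have selectorText; @media etc. are skipped.
      if (!rule.selectorText) continue;
      let matched;
      try {
        matched = doc.querySelector(rule.selectorText) !== null;
      } catch (e) {
        matched = true; // selector the engine can't query: don't report it
      }
      if (!matched) unused.push(rule.selectorText);
    }
  }
  return unused;
}
```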

Serve resources from a consistent URL

This one is fairly obvious. To benefit from caching, we need to keep the URL consistent. For example, if on different pages you serve the same image, but from two different domains, then it will get downloaded twice instead of being read from cache.

For most people this shouldn’t be an issue; it’s most likely to apply if you’re doing something fancy and automated to split your content across multiple hostnames.
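If you do split content across hostnames automatically, one way to keep URLs consistent is to choose the hostname from the resource’s path with a stable hash, so the same file always gets the same URL on every page. Here’s a sketch; the hostnames are placeholders, and the djb2-style hash is just one simple choice (any deterministic hash would do).

```javascript
// Sketch: map each resource path to one fixed hostname, so its URL (and
// therefore its cache entry) is the same on every page.
const ASSET_HOSTS = ["static1.example.com", "static2.example.com"]; // placeholders

function hostFor(path) {
  let hash = 5381; // djb2-style string hash (xor variant)
  for (let i = 0; i < path.length; i++) {
    hash = ((hash * 33) ^ path.charCodeAt(i)) >>> 0; // keep an unsigned 32-bit int
  }
  return ASSET_HOSTS[hash % ASSET_HOSTS.length];
}

function assetUrl(path) {
  return `http://${hostFor(path)}${path}`;
}
```

Bear in mind that changing the list of hostnames moves most files to new URLs, so visitors will download them again once.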

Leverage proxy caching

Now this one is clever. When people visit your website, its resources can be cached not only by their browsers but also by their ISP. Then when another visitor comes via the same ISP, he can download a copy from the ISP’s proxy cache, which will be faster because it’s closer to him than your server. Page Speed’s documentation recommends that, with a few exceptions, you set a Cache-Control: public header for static resources (such as images).

Be careful not to do this for resources that have cookies attached, as you may end up allowing proxies to cache information that should be kept private to a visitor; the best solution is to serve these resources from a cookie-less domain. Also be careful with gzipping: some proxies will send gzipped content to browsers that can’t read it.

I’m not sure how this rule interacts with the use of a CDN. Again, this is one I don’t understand well, and I’d welcome discussion on it.
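Putting the cautions above together, a server might choose its caching headers along these lines. This is a sketch with assumed inputs (the three flags) and an assumed one-year lifetime. The Vary: Accept-Encoding header, which tells proxies to keep gzipped and uncompressed copies separate, is a standard way to address the gzip problem, but how your server attaches these headers is up to you.

```javascript
// Sketch: pick caching headers for a response. The flags and the one-year
// lifetime are assumptions for illustration.
const ONE_YEAR_SECONDS = 365 * 24 * 60 * 60; // 31,536,000

function cachingHeaders({ isStatic, setsCookie, isCompressible }) {
  if (!isStatic) {
    return { "Cache-Control": "private, no-cache" }; // dynamic pages: no proxy caching
  }
  if (setsCookie) {
    // The browser may cache it, but shared proxies must not store the cookie.
    return { "Cache-Control": `private, max-age=${ONE_YEAR_SECONDS}` };
  }
  const headers = { "Cache-Control": `public, max-age=${ONE_YEAR_SECONDS}` };
  if (isCompressible) {
    headers["Vary"] = "Accept-Encoding"; // separate gzipped/plain copies
  }
  return headers;
}
```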

Use efficient CSS selectors

This rule is controversial. The idea is that some CSS selectors are much more expensive than others for the browser to match against elements. Browsers evaluate a selector from right to left, starting with the rightmost (“key”) selector, so the most efficient are ID and class selectors, because these do not require the browser to look higher up the document tree to determine a match.

With this rule, Google is recommending a radical change in the way we write CSS. Specifically, they are suggesting that we add otherwise unnecessary IDs and classes to the markup, in return for a speed advantage. As an example, they consider the situation where you want different colours on ordered and unordered list items:

```css
ul li {color: blue;}
ol li {color: red;}
```

That would be the usual way to do it; instead, Google recommends adding a class to each <li> element:

```css
.unordered-list-item {color: blue;}
.ordered-list-item {color: red;}
```

No doubt this is faster, but it also takes longer to write and imposes a maintenance burden in your markup. If there were tools that would automatically generate such optimised CSS and the accompanying markup, then it might be worth doing. I suppose you could use server-side coding to generate the markup (for example, using the HTML helper from CakePHP), but this seems a heavy-handed approach.

My scepticism over this rule was initially quashed by the towering authority of Google, but then I looked around to see whether there was any research on the subject. The most respectable tests I could find came from Steve Souders himself, in his post about the performance impact of CSS selectors. Steve found that, in real-world conditions, the maximum possible benefit of optimising CSS is 50 ms; and for 70% of users (i.e. those running IE7 or FF3), it’s only 20 ms. These numbers were obtained with 6000 DOM elements and 2000 extremely inefficient CSS rules. This is pretty much a worst-case scenario; most sites, even complex ones, will have far fewer DOM elements and CSS rules, and their CSS will also be much simpler.

Steve concludes that the potential performance benefits are small, and not worth the cost. I’m inclined to agree, but I’d welcome more information.

Nevertheless, there’s no harm in getting into good habits: some of Google’s recommendations for CSS selectors are quite reasonable, such as not over-qualifying ID and class selectors with an antecedent tag selector (so .errorMessage is better than p.errorMessage). Such coding habits also sit harmoniously with object-oriented CSS.
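As a small illustration of that habit, the hypothetical helper below suggests the unqualified form of a simple selector such as p.errorMessage. Bear in mind that dropping the tag lowers the selector’s specificity and widens what it matches, so check the result before using it.

```javascript
// Sketch: suggest an unqualified version of a simple selector such as
// "p.errorMessage" or "div#header". Handles a single compound selector only
// (no combinators, attribute selectors or pseudo-classes); returns null when
// there is nothing to suggest.
function unqualify(selector) {
  const match = /^([a-zA-Z][a-zA-Z0-9]*)((?:[.#][\w-]+)+)$/.exec(selector.trim());
  return match ? match[2] : null;
}
```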

If you read Steve’s post, be sure to check out Nicole Sullivan’s comment: “Micro-optimization of selectors is going a bit off track in a performance analysis of CSS. The focus should be on scalable CSS.” To me, this seems a much more sensible and maintainable approach than the monomaniacal one recommended by Google.

I do extremely badly on this rule (0%). Although I consider the recommendations to be unrealistic, my terrible score does reflect the excessive complexity and lack of modularity within my CSS.

Rules unique to Yslow 2.0

In some cases, Page Speed’s guidelines may include these topics, but not in the form of an automated check on your web page.

Note that Yahoo’s documentation includes other recommendations that are not checked by Yslow (because they haven’t discovered a sensible way to automate the test). Yslow has 22 rules, but Yahoo lists 34 best practices in total.

- Reduce the number of DOM elements

- Make favicon small and cacheable

- Avoid HTTP 404 (Not Found) errors

- Avoid AlphaImageLoader filter

- Make AJAX cacheable

- Use GET for AJAX requests

Reduce the number of DOM elements

The more DOM elements you have, the longer it takes to download your page, to render it, and to play with the DOM via javascript.

Essentially, this rule asks you to avoid large amounts of unnecessary markup, including markup added by javascript. As an example, yahoo.com has about 700 DOM nodes, despite being a busy page. My home page has 267 DOM nodes, and that could be reduced a lot. You can check how many elements your page has by entering the following into Firebug’s console:

```javascript
document.getElementsByTagName('*').length
```
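If you want more than the total, the following sketch (mine, not part of either tool) walks the tree from document.documentElement and breaks the count down by tag, which makes it easier to see where the bulk comes from. Its total should match the one-liner, since both count every element including <html>.

```javascript
// Sketch: count elements under `root`, broken down by tag name. Uses only
// the standard `tagName` and `children` properties.
function countElementsByTag(root) {
  const counts = {};
  let total = 0;
  const stack = [root];
  while (stack.length > 0) {
    const el = stack.pop();
    const tag = el.tagName.toLowerCase();
    counts[tag] = (counts[tag] || 0) + 1;
    total++;
    for (const child of Array.from(el.children)) {
      stack.push(child);
    }
  }
  return { total, counts };
}

// In Firebug's console: countElementsByTag(document.documentElement)
```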

Blindly applying this rule can be dangerous (and that’s true of many performance rules). Don’t cut off your nose to spite your face! Markup purists will take this rule as a vindication for using the absolute minimum of markup, and in particular for avoiding the use of container <div>s.

By all means remove extraneous markup, and also try to limit the complexity of DOM access in your javascript (for example, avoid using javascript to fix layout issues; here I have sinned). But never be afraid to throw in an extra container <div>.

Make favicon.ico small and cacheable

You might think that a favicon is not even worth the HTTP request, but you don’t get a say in the matter: the browser is going to request it anyway. Make one, make it small, and put it in the root directory of your website (where the browser will look for it).

Because you can’t change the name of this file (browsers look for /favicon.ico by default), you can’t force visitors to fetch a new version by renaming it, so you should be moderate in setting its expiry date. It’s hardly essential that your visitors immediately get your latest favicon, but equally you wouldn’t want it to be cached for 10 years! I give mine a two-month shelf-life.
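In HTTP terms, a two-month shelf-life means response headers along these lines. The sketch approximates two months as sixty days and sends both Cache-Control (for HTTP/1.1) and Expires (for older HTTP/1.0 caches); how you attach the headers depends on your server, so treat it as an illustration only.

```javascript
// Sketch: caching headers for a favicon with a roughly two-month lifetime.
const FAVICON_MAX_AGE = 60 * 24 * 60 * 60; // sixty days = 5,184,000 seconds

function faviconHeaders(now = new Date()) {
  const expires = new Date(now.getTime() + FAVICON_MAX_AGE * 1000);
  return {
    "Cache-Control": `public, max-age=${FAVICON_MAX_AGE}`,
    Expires: expires.toUTCString(), // HTTP-date format, e.g. "Mon, 02 Mar 2009 00:00:00 GMT"
  };
}
```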

Avoid HTTP 404 (Not Found) errors

This one is obvious. If your document has broken links, fix them.

Avoid AlphaImageLoader filter

Ah, good old alpha-transparent PNGs; how we love them! What web designer hasn’t flirted with multi-layer scrolling transparencies at some point? And who has not felt a sense of satisfied mastery, upon forcing IE6 to eat them via a clever hack?

The sobering reality is that, although you can make alpha-transparency work in IE6, you pay a heavy price for doing so. All the hacks rely on Microsoft’s AlphaImageLoader filter. Not only does this filter block rendering and freeze the browser while it’s being calculated, but it also increases memory consumption. To make matters worse, the performance penalty is incurred for each element, not for each image. For example, let’s say you have a fancy alpha-transparent bullet point image for your unordered list items; on a page with 20 bullets, you get the penalty 20 times over.

Use PNG-8 transparency instead, if you can. Incidentally, creating a web page from multiple layers of transparency is probably a bad idea anyway: even in good browsers, these kinds of pages are sluggish to scroll; find a better medium for expressing latent op art.

Make AJAX cacheable, and use GET for AJAX requests

I can’t pretend to understand these rules properly, having never used AJAX. Nevertheless, the ideas are straightforward.

If a resource has not changed since it was fetched, we want to read it from cache rather than getting a new copy; this applies just as much to something requested via AJAX. Steve summarises it thus:

Even though your Ajax responses are created dynamically, and might only be applicable to a single user, they can still be cached. Doing so will make your Web 2.0 apps faster.

Apparently, GET is more efficient than POST, because it can send data in a single packet, whereas POST takes two steps: first send the headers, then send the data. Providing your data is less than 2 kB (a limit on the URL length in IE), you should be able to use GET for AJAX requests.
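Here’s a sketch of how the two ideas might fit together (my own illustration; the endpoint and parameter names are placeholders). It builds a GET URL when the data is small enough and falls back to POST otherwise. For cacheability, the idea is to include something that changes only when the data changes, such as a last-modified timestamp, rather than a random value that defeats the cache. The 2083-character figure is the commonly quoted maximum URL length in IE, i.e. the “2 kB” above.

```javascript
// Sketch: prefer GET for small AJAX requests (cacheable, single step),
// falling back to POST when the URL would be too long for IE.
const MAX_GET_URL_LENGTH = 2083; // commonly cited IE limit, in characters

function buildAjaxRequest(baseUrl, params) {
  const query = new URLSearchParams(params).toString();
  const url = query ? `${baseUrl}?${query}` : baseUrl;
  if (url.length <= MAX_GET_URL_LENGTH) {
    return { method: "GET", url, body: null };
  }
  return { method: "POST", url: baseUrl, body: query };
}

// Usage (placeholder endpoint; "v" changes only when the data does):
// buildAjaxRequest("/api/contacts", { user: "mike", v: lastModified });
```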

Conclusions

Google Page Speed is a useful new tool for optimising your websites’ performance. However, some of its advice can be misleading—in particular, CSS selector efficiency is a red herring that distracts you from the more useful goal of building object-oriented CSS.

Yslow is the more mature tool, and I recommend you give it priority. After you’ve finished with Yslow, you may be interested in what Page Speed has to say.

Fantastic Mike. I am still yet to use either of these products but there is info apart from the speed summaries that I find helpful in here.

Very helpful comparison of YSlow and Google’s equivalent, thanks Mike, just what I wanted.

Great article!


This guide is just amazing. I am going to implement most of them.
